In today’s fast-paced world, artificial intelligence (AI) is rapidly transforming industries, boosting productivity, and reshaping how we live and work. While it offers incredible benefits, this technology also has a hidden side called Shadow AI. Let’s dive into what Shadow AI is and how organizations can balance innovation with security.

Will robots take over the world? The question remains controversial. Some organizations have fully integrated AI while others use it more moderately, and the debate continues about what role humans will play alongside robots.

What is Shadow AI?

Shadow AI refers to the use of AI tools and applications that an organization has not officially approved or does not monitor. Imagine a worker using a chatbot or data analysis tool that their company hasn’t authorized. This often happens when employees look for faster or better ways to do their jobs and bypass the rules their workplace has set.

Automation streamlines data management, making it faster and more efficient. Organizations use these tools to handle vast amounts of information with precision, and as the technology evolves, businesses continue to benefit from improved accuracy and reduced manual workloads.

While Shadow AI can spark creativity and new ideas, it also poses risks. Because these tools are unsanctioned and unreviewed, they may not follow the company’s security protocols, which can lead to data leaks or misuse of sensitive information.

Why Do People Use Shadow AI?

- Convenience: Employees often seek out easy-to-use tools that help them complete tasks quickly. Shadow AI allows them to bypass traditional processes.

- Speed: In many cases, employees want results fast. They might turn to Shadow AI for quick fixes instead of waiting for official tools to be implemented.

- Innovation: New tools can inspire creative solutions to problems. Shadow AI can foster innovation even if it isn’t part of the approved workflow.

Current Context and Usage Statistics

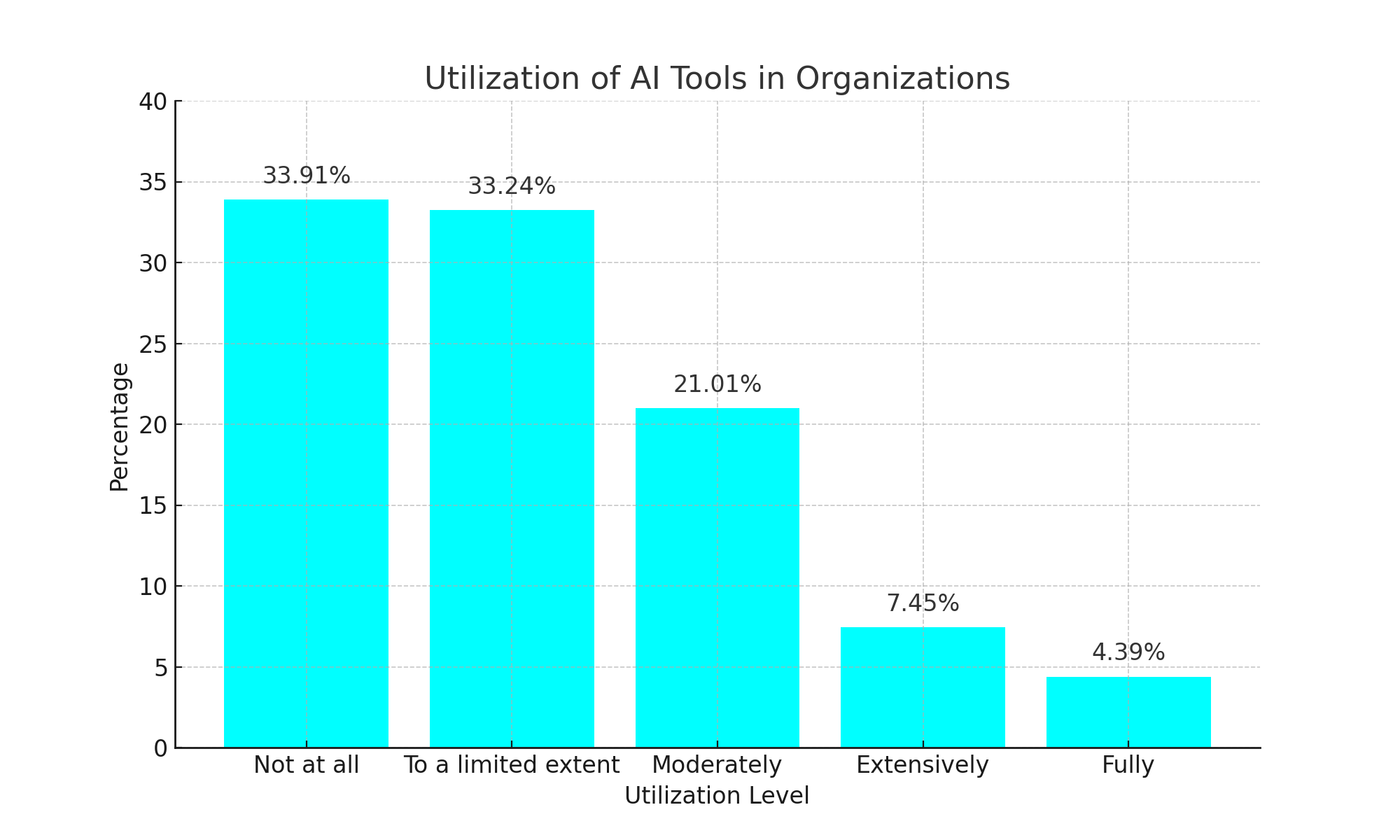

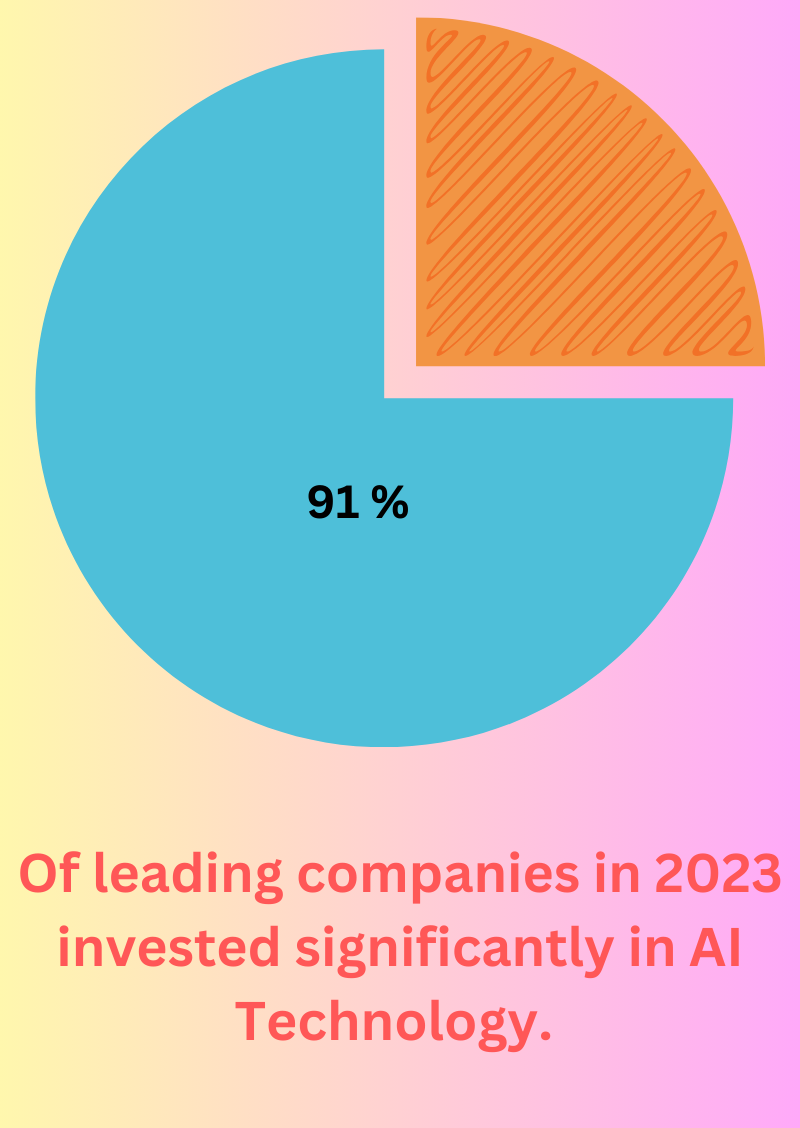

- Prevalence: Only 4.39% of companies have fully integrated AI tools, yet approximately 44% of employees use AI in their work, and 28% use these tools unsupervised. This points to widespread Shadow AI and a disconnect between executive caution and employee usage.

- Executive Awareness: Only 45% of executives approach AI cautiously, which suggests gaps in awareness and in strategies for managing its rapid adoption. Some estimates put employee AI use at work as high as 75%, and this gap between how widely employees use AI and how cautiously executives approach it highlights the urgent need to address Shadow AI.

According to Tech.co’s 2024 report on the impact of technology on the workplace, only 4.39% of companies have fully integrated AI tools throughout their organization. That’s roughly 1 in 23 companies.

Risks Associated with Shadow AI

- Data Privacy Violations: Unauthorized AI usage can expose sensitive company information and lead to data breaches.

- Regulatory Non-Compliance: Shadow AI can cause organizations to fall short of regulatory standards such as the EU AI Act, which sets rules for the ethical and responsible use of AI, and the GDPR, which governs data protection and privacy. Complying with these regulations is essential to using AI ethically and responsibly.

- Misinformation: AI-generated misinformation can find its way into internal reports and client communications, and 49% of senior leaders say they are concerned about this issue.

- Cybersecurity Vulnerabilities: AI tools used in coding can introduce bugs or vulnerabilities, posing potential security risks to organizations.

In short, Shadow AI combines the unauthorized use of AI tools with data security vulnerabilities and a lack of oversight. Without proper governance, it can lead to ethical issues and compliance risks.

AI ethics, built on standards such as transparency, fairness, and accountability, is crucial in the context of Shadow AI to prevent misuse and its negative consequences.

Difference from Shadow IT and Impact on IT Governance

Evolution

Shadow IT covers any software, hardware, or service used without IT approval, often to access corporate data. Shadow AI refers specifically to the unsanctioned use of AI technologies, which are increasingly integrated into both personal and professional workflows.

Governance Challenges

Shadow AI poses significant governance challenges for IT departments, which must balance employee productivity and innovation with security and compliance needs. The potential risks of Shadow AI, such as data breaches, regulatory non-compliance, and loss of intellectual property, highlight the need for robust governance frameworks to monitor AI activities effectively.

Mitigation Strategies

Establish Clear Policies

Organizations should develop comprehensive AI usage guidelines that specify which tasks and roles may use AI, and with which tools, so that usage stays within known risk limits. For instance, a policy might approve AI for data analysis that flags potential security threats, or for customer service tasks where automating routine work reduces the risk of human error.
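To make such guidelines enforceable, some teams express them as data rather than prose. The Python sketch below is a minimal illustration under assumed conventions: the tool names, task labels, and the three sensitivity levels (`public`, `internal`, `confidential`) are all hypothetical, and any tool missing from the policy table is treated as unapproved.

```python
from dataclasses import dataclass, field

# Hypothetical data sensitivity levels, ordered from least to most sensitive.
SENSITIVITY = {"public": 0, "internal": 1, "confidential": 2}


@dataclass(frozen=True)
class ToolPolicy:
    """One approved AI tool, the tasks it may be used for, and the most
    sensitive data class it may receive."""
    name: str
    max_sensitivity: str
    allowed_tasks: frozenset = field(default_factory=frozenset)


# Illustrative policy table; tool names are placeholders, not recommendations.
POLICY = {
    "internal-chat-assistant": ToolPolicy(
        "internal-chat-assistant",
        "confidential",
        frozenset({"drafting", "summarization", "data-analysis"}),
    ),
    "public-chatbot": ToolPolicy(
        "public-chatbot",
        "public",
        frozenset({"drafting"}),
    ),
}


def is_permitted(tool: str, task: str, data_sensitivity: str) -> bool:
    """Return True only if the tool is approved, the task is allowed for it,
    and the data is no more sensitive than the tool's limit."""
    policy = POLICY.get(tool)
    if policy is None:
        return False  # deny by default: unlisted tools count as Shadow AI
    if task not in policy.allowed_tasks:
        return False
    # Raises KeyError for an unknown sensitivity label, surfacing bad input.
    return SENSITIVITY[data_sensitivity] <= SENSITIVITY[policy.max_sensitivity]
```

With this table, drafting public text in `public-chatbot` is allowed, but pasting confidential data into the same tool is not. The deny-by-default choice reflects the policy goal: a new tool has to be added deliberately before it becomes "approved".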

Communication

Proactive communication about risks and policies is essential. It keeps employees involved and well informed, so they understand the implications of using unsanctioned AI tools and their own role in maintaining AI governance.

Training Programs

Training programs are crucial. They educate employees on responsible AI use, help them feel prepared and competent, and reinforce the importance of compliance and risk awareness in AI governance.

Access Control and Monitoring

Establishing strict access controls and monitoring AI usage helps organizations manage who can utilize AI solutions and ensures compliance with established guidelines.
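As a rough illustration of pairing access control with monitoring, the following Python sketch checks a hypothetical role-to-tool mapping and writes every allow or deny decision to an audit log. The roles, tool names, and log format are assumptions for illustration; a real deployment would rely on the organization’s identity provider and logging infrastructure.

```python
import logging
from datetime import datetime, timezone

logging.basicConfig(level=logging.INFO, format="%(message)s")
audit_log = logging.getLogger("ai_audit")

# Hypothetical mapping of job roles to the AI tools they may use.
ROLE_ACCESS = {
    "analyst": {"internal-chat-assistant", "forecasting-model"},
    "support": {"internal-chat-assistant"},
}


def request_ai_access(user: str, role: str, tool: str) -> bool:
    """Grant or deny access to an AI tool and record the decision."""
    # Unknown roles get an empty set, so they are denied by default.
    allowed = tool in ROLE_ACCESS.get(role, set())
    audit_log.info(
        "%s user=%s role=%s tool=%s decision=%s",
        datetime.now(timezone.utc).isoformat(),
        user,
        role,
        tool,
        "allow" if allowed else "deny",
    )
    return allowed
```

For example, `request_ai_access("alice", "analyst", "forecasting-model")` returns `True` and logs an `allow` entry, while a support user asking for the forecasting model is denied and the denial is still recorded. Logging denials as well as grants shows which tools employees are trying to reach, which is often the first sign of unmet needs that drive Shadow AI.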

Long-term Strategy

- Centralization of AI Solutions: Organizations should consider centralizing AI technologies to limit Shadow AI and enhance their ability to monitor, control, and scale AI applications effectively.

- Partnerships: Collaborating with specialized companies can provide insights and support for organizations navigating the complexities of AI integration.

How to Balance Innovation and Security

Balancing innovation and security is vital in today’s tech-driven world. Organizations should encourage creativity while implementing strong security measures to protect data and infrastructure.

Looking further ahead raises another question: what does the future hold for today’s children? Preparing them for a tech-centric future requires equipping them with critical thinking and digital literacy skills. By emphasizing security awareness alongside education, we empower the next generation to embrace innovation responsibly while safeguarding their digital lives.

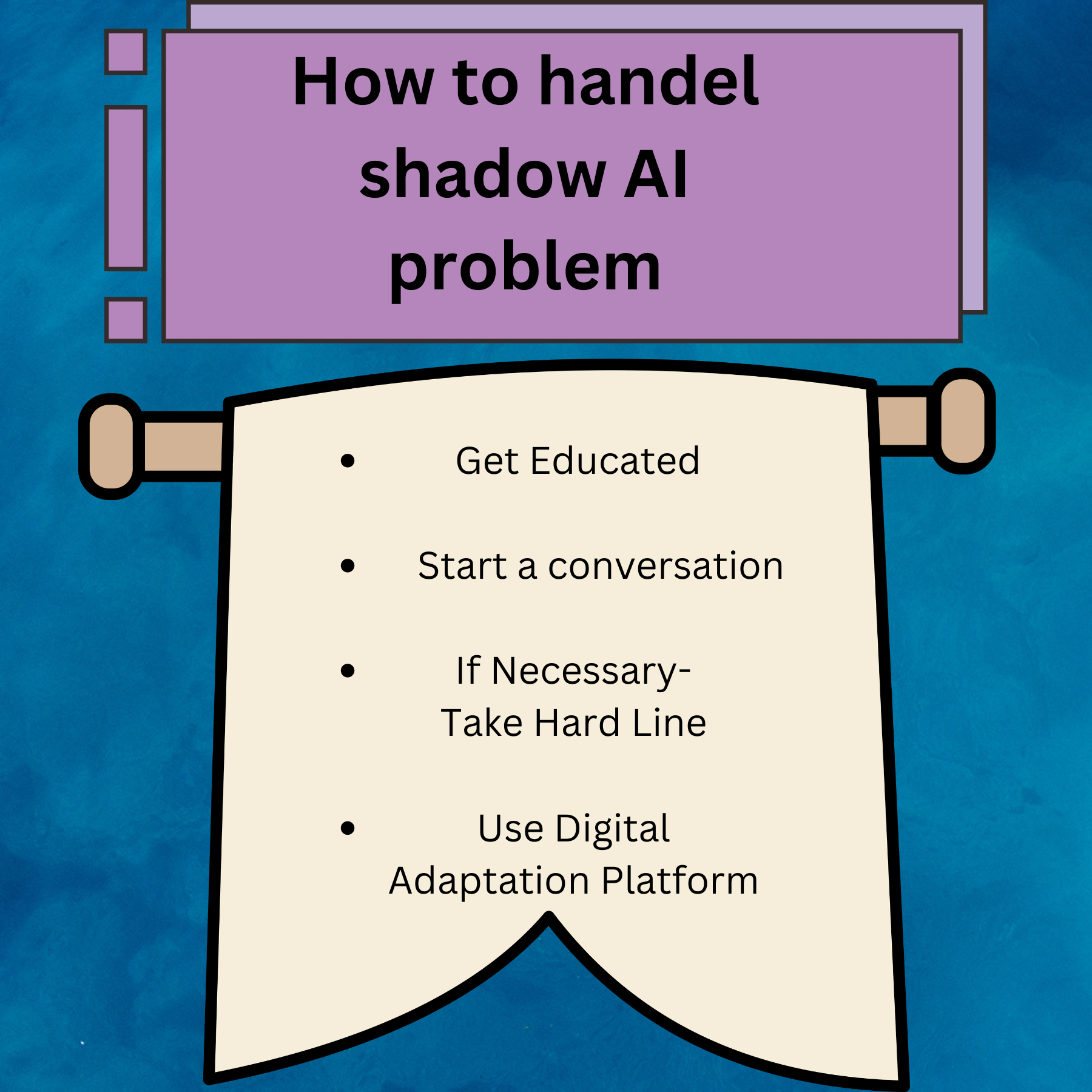

To enjoy the benefits of AI while keeping security in mind, organizations can take several steps:

- Create Clear Policies: Companies should develop clear guidelines about which AI tools are allowed. Employees need to know what is acceptable and what isn’t.

- Encourage Communication: Foster a culture where employees feel comfortable discussing their needs and concerns about AI tools. Open communication can lead to better solutions.

- Provide Approved Tools: Offer a variety of secure and effective approved AI tools. When employees have access to the right resources, they are less likely to seek out unapproved options.

- Training and Education: Train employees on the importance of data security and the risks of using unapproved tools. Knowledge is key to making informed decisions.

- Monitor Usage: Track which AI tools employees use. This helps identify Shadow AI and address issues before they become serious problems.
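One way to monitor usage is to scan web proxy logs for traffic to known AI services that are not on the approved list. This Python sketch assumes a simplified log format of `timestamp user domain` on each line, and the domain lists use reserved `.example` names as placeholders; in practice, a security team would maintain these lists and update them as new tools appear.

```python
from collections import Counter
from typing import Iterable

# Placeholder domains; a real list would be curated by the security team.
KNOWN_AI_DOMAINS = {
    "chat.ai-vendor.example",
    "api.llm-vendor.example",
    "ai.corp.example",
}
APPROVED_AI_DOMAINS = {"ai.corp.example"}


def find_shadow_ai(log_lines: Iterable[str]) -> Counter:
    """Count requests per (user, domain) to AI services that are not approved.

    Assumes each log line is 'timestamp user domain', separated by whitespace.
    """
    hits = Counter()
    for line in log_lines:
        parts = line.split()
        if len(parts) != 3:
            continue  # skip malformed lines instead of failing the whole scan
        _, user, domain = parts
        if domain in KNOWN_AI_DOMAINS and domain not in APPROVED_AI_DOMAINS:
            hits[(user, domain)] += 1
    return hits


sample = [
    "2024-05-01T09:00Z alice ai.corp.example",
    "2024-05-01T09:05Z bob chat.ai-vendor.example",
    "2024-05-01T09:07Z bob chat.ai-vendor.example",
    "2024-05-01T09:10Z carol intranet.corp.example",
]
print(find_shadow_ai(sample))
# Counter({('bob', 'chat.ai-vendor.example'): 2})
```

Counting hits per user and domain, rather than flagging single requests, makes it easier to tell a one-off experiment from regular reliance on a tool. Regular use can be a signal to evaluate that tool for approval rather than simply block it.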

Conclusion

Shadow AI presents both opportunities and challenges. While it can lead to innovation and efficiency, it also brings risks to data security and compliance. By creating clear policies, encouraging communication, and providing approved tools, organizations can strike a balance between embracing new technologies and protecting their valuable information. In this age of AI, finding this balance is essential for success and security.

Moonpreneur is on a mission to disrupt traditional education and future-proof the next generation with holistic learning solutions. Its Innovator Program is building tomorrow’s workforce by training students in AI/ML, Robotics, Coding, IoT, and Apps, enabling entrepreneurship through experiential learning.

While this article does a great job of explaining Shadow AI, I feel like it misses a key aspect: how can companies realistically keep up with Shadow AI when new tools are popping up almost daily? And what about employees who genuinely need these tools to keep up with workloads but lack approved resources? Isn’t there a risk that strict governance might actually slow down innovation or frustrate teams? Curious to know if there’s a practical balance beyond just policy!

Great question! Balancing the rise of Shadow AI with company governance is tough. With new tools emerging constantly, strict control can indeed slow down innovation and frustrate employees who need these tools to handle their workloads efficiently. Instead of rigid rules, companies might find it more practical to offer a sandbox approach: a safe, limited environment where employees can test tools. This way, companies stay aware of what’s being used, and employees get the flexibility to explore solutions that make them more productive. It’s about keeping data secure while giving teams the tools they genuinely need.

One interesting aspect I’ve come across is the role of ‘ethical AI guidelines’ in curbing Shadow AI, like those recently emphasized in Google’s AI principles. They suggest adding a layer of transparency to every AI tool, such as tracking user activity to ensure secure use. This could be a great addition to help companies explore AI safely while managing risks.